Article author information:

-

Kate Starbird (@katestarbird) is co-founder of the Center for an Informed Public at the University of Washington and associate professor in the Department of Human Centered Design & Engineering. She studies the spread of rumors and misinformation during crisis events.

-

Emma Spiro (@emmaspiro) is co-founder of the Center for an Informed Public and assistant professor at the University of Washington Information School, where she studies online information and social behaviors in the context of crisis events.

-

Jevin West (@jevinwest) is co-founder of the Center for an Informed Public and associate professor at the University of Washington Information School, where he studies misinformation in and about science. He is co-author of a forthcoming book on skepticism in a data-driven world (Random House, 2020).

-

This work has been supported by grants from the John S. and James L. Knight Foundation and the Hewlett Foundation for the University of Washington's Center for an Informed Public, as well as research grants from the National Science Foundation (nos. 1715078 and 1749815).

-

COMMENTARY (BuriedTruth). Undisclosed conflict of interest: the Lincoln Network (President: Aaron Ginn, the author of the disinformation article under discussion) is partly funded by the Knight Foundation and the Hewlett Foundation -- as are this article's authors (see above)!

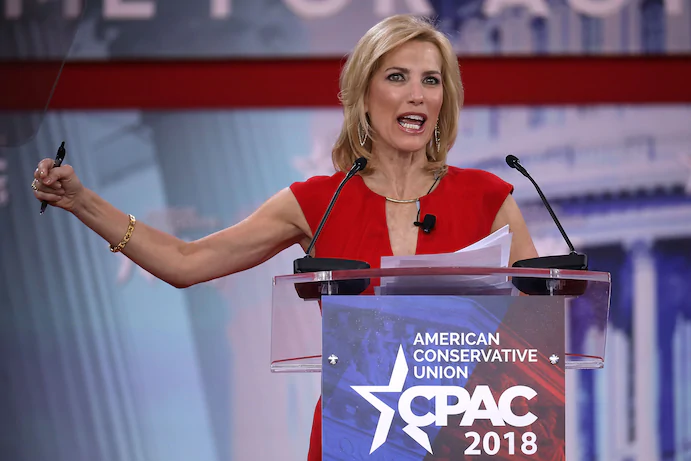

FOX News host, disinformation troll Laura Ingraham.


In a crisis, people struggle collectively to make sense of a complex and frightening situation -- and as a result, misinformation spreads. For several reasons, that's been especially true during the novel coronavirus pandemic. Scientists are still trying to understand everything about the virus's disease, COVID-19, including how it spreads and which treatments work. Armchair epidemiologists are filling the Internet with their own interpretations of the emerging science.

But partisan polarization, interacting with social media and cable news, has amplified this dramatically, quickly spreading problematic beliefs about COVID-19. Here's one key example.

A COVID-19 article went -- well -- viral

On March 20, 2020, Aaron Ginn [President of the Lincoln Network] wrote a Medium article titled "Evidence over Hysteria -- COVID-19" and tweeted it out. He presented scientific findings and statistics related to the virus, arguing that the health risks were overstated and that social distancing would hurt the economy. Experts (including our University of Washington colleague, biologist Carl Bergstrom) heavily criticized the article for misrepresenting the science and promoting misunderstanding. About 32 hours after it was posted, Medium removed the article for violating its platform policies.

Our research suggests that the article initially received little attention on Twitter. But on March 21, 2020, around 6:45 a.m. Eastern time, the article suddenly "went viral," rapidly gaining visibility through tweets and retweets and surging to approximately 1,000 tweets an hour for several hours. Ginn's number of Twitter followers quadrupled in two days, from 4,000 to more than 16,000. This burst of attention was short-lived -- Medium removed the article about 13 hours after it began to spread widely, while Twitter added a safety warning to the link, discouraging users from clicking on it.

We used new techniques to show how it spread

Our research team has studied how social media users, including those with initially small social networks, rapidly gain attention -- and build potentially lasting audiences -- during crises. Working with student Kaitlyn Zhou, we developed a way of depicting this.

The graph below shows both the observed rate at which a piece of content is shared and its resulting exposure through the different accounts that share it. The x-axis measures time, and the y-axis measures the total number of tweets linking to the article, so the line rises as tweets accumulate. The circles and diamonds mark tweets from accounts with more than 10,000 followers, showing how specific large accounts helped spread the word. The circles show regular tweets that link to the story, while the diamonds show tweets that quote content. When an account sends out a new tweet about the content, we color it red, and when it just retweets someone else's tweet, we color it blue. The bigger the circle or diamond, the larger the number of followers of that account -- and consequently, the greater the exposure of that tweet.

This helps us understand not only how quickly the article is spread across Twitter, but also which communities it is reaching and who is helping to push it.
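The encoding described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the research team's actual code: the `Tweet` and `Marker` types, the `build_cumulative_plot` function, the sample accounts, and the square-root size scaling are all hypothetical, and treating a quoting tweet as a "new" (red) tweet is our guess. Only the 10,000-follower threshold and the shape and color rules come from the description.

```python
import math
from dataclasses import dataclass
from datetime import datetime

FOLLOWER_THRESHOLD = 10_000  # only accounts above this get a marker


@dataclass
class Tweet:
    time: datetime
    account: str
    followers: int
    kind: str  # hypothetical labels: "original", "retweet", or "quote"


@dataclass
class Marker:
    x: datetime   # when the tweet was sent
    y: int        # cumulative tweet count at that moment
    shape: str    # "circle" or "diamond"
    color: str    # "red" for new tweets, "blue" for retweets
    size: float   # grows with follower count
    account: str


def build_cumulative_plot(tweets):
    """Return the cumulative curve and the markers for large accounts."""
    ordered = sorted(tweets, key=lambda t: t.time)
    curve, markers = [], []
    for count, t in enumerate(ordered, start=1):
        curve.append((t.time, count))
        if t.followers > FOLLOWER_THRESHOLD:
            markers.append(Marker(
                x=t.time,
                y=count,
                # Assumption: only quoting tweets are drawn as diamonds.
                shape="diamond" if t.kind == "quote" else "circle",
                # Assumption: a quoting tweet counts as a new tweet (red).
                color="blue" if t.kind == "retweet" else "red",
                # Assumption: square-root scaling, so area tracks followers.
                size=math.sqrt(t.followers),
                account=t.account,
            ))
    return curve, markers


# Made-up sample data, deliberately out of time order.
sample = [
    Tweet(datetime(2020, 3, 21, 6, 0), "small_account", 350, "original"),
    Tweet(datetime(2020, 3, 21, 6, 45), "big_account_a", 1_000_000, "original"),
    Tweet(datetime(2020, 3, 21, 7, 30), "big_account_c", 40_000, "quote"),
    Tweet(datetime(2020, 3, 21, 7, 10), "big_account_b", 250_000, "retweet"),
    Tweet(datetime(2020, 3, 21, 7, 20), "another_small", 900, "retweet"),
]

curve, markers = build_cumulative_plot(sample)
for m in markers:
    print(m.account, m.y, m.shape, m.color, m.size)
```

The curve and the marker list could then be handed to any plotting library. Keeping the data preparation separate from the drawing makes it easier to check which accounts appear on the graph and why.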

-

Cumulative graph of tweets linking to the "Evidence over hysteria" Medium article. Time = EDT.


This provides important information

What does this tell us about the way that "Evidence over hysteria" spread across Twitter? First, it tells us that "Evidence over hysteria" was initially a slow burner on Twitter. Some viral posts -- usually those posted by an account that already has a large audience -- take off quickly. Others require an external booster or boosters, growing in a pattern that looks like an S-shape: starting slow, picking up steam and eventually reaching thousands of users, before leveling off.

At first, the "Evidence over hysteria" article spread very slowly. It began to take off after FOX News' Brit Hume tweeted it early on March 21, 2020. Hume's account (@brithume) had over 1 million followers, and his tweet was retweeted 5,551 times in our data. Bret Baier, another FOX News personality with a large Twitter following (@BretBaier), was one of the retweeters. Not long after Baier's retweet, FOX News host Laura Ingraham (@IngrahamAngle) posted her own tweet linking to the article.

Both Baier's and Ingraham's tweets went out just as the line showing the overall number of tweets grew steeper. This suggests that they helped the article spread faster. Other right-wing media personalities, including James O'Keefe and Sebastian Gorka, then joined the amplification chain. However, the rate of tweets linking to the article slowed down considerably after Medium removed it from its platform.

Both Brit Hume and Bret Baier posted tweets the next day acknowledging criticisms of the article. Their tweets did not appear in our analysis, because they did not link to the article. Those corrections are commendable. However, corrections rarely receive as much attention as the original post, and those follow-up tweets did not spread as far as the original cascade, with roughly 750 retweets compared to roughly 15,000 tweets for the originals.

This is a typical story -- and that's a problem

The spread of this story demonstrates some common patterns. First, a small group of key social media influencers can amplify the spread of misleading information and boost the long-term profile of previously obscure authors. Second, social media platforms like Twitter interact quickly with other media like cable news; FOX News personalities played a key role in spreading the story.

Third, and most important, science is being politicized. The article was originally published by a Medium channel associated with the Lincoln Network, a conservative nonprofit organization, and spread by media personalities with the same political orientation. While the downstream spreaders and amplifiers probably weren't intentionally sharing misleading information, their choices presumably reflected their political priorities.

This is not unique to the political right; partisan media and influencers on the left also selectively amplify content that aligns with their political views and objectives. As media debate about pandemics becomes more partisan, it will be harder to persuade citizens to accept scientific information that pushes against their politics.
